Stage 1: Local Agent Execution

Your on-ramp to CAF. No federation required.

Task

Implement and run a simple, persistent, stateful agentic application on a laptop or workstation, using local or remote LLMs.

Why This Matters

Local execution lets you develop and test agent logic before deploying to HPC. LangGraph and Academy specifications are reproducible and portable—the same agent definition runs locally or at scale.

Details

| Aspect | Value |
|---|---|
| Technologies | LangGraph, Academy |
| Where code runs | Laptop, workstation, VM |
| Scale | Single agent / small multi-agent |
| Status | Mature |

Architecture

This stage covers agentic applications in which one or more agents run entirely on a local machine, each potentially calling tools and/or LLMs (served remotely through an API or hosted locally).

```text
┌──────────────────────────────────┐
│ Workstation                      │
│                                  │
│   ┌───────┐    ┌───────┐         │
│   │ Agent │───▶│ Tools │         │
│   └───┬───┘    └───────┘         │
│       │                          │
│       ▼                          │
│   ┌───────┐                      │
│   │  LLM  │ (API or local)       │
│   └───────┘                      │
└──────────────────────────────────┘
```
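The flow in the diagram (the agent asks an LLM what to do, runs any tool the LLM requests, and feeds the result back) can be sketched without any framework. This is a minimal sketch under stated assumptions: `stub_llm` is a hypothetical stand-in for a real API or local model, and the message dictionaries are a made-up format, not how LangGraph or Academy represent messages internally.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> Python callable.
TOOLS: dict[str, Callable[[str], str]] = {
    "upper": lambda text: text.upper(),
}


def stub_llm(messages: list[dict]) -> dict:
    """Hypothetical stand-in for an LLM call (remote API or local model).

    If the last message is a tool result, answer with it; otherwise
    request the 'upper' tool on the user's text.
    """
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"Result: {last['content']}"}
    return {"role": "assistant", "tool": "upper", "args": last["content"]}


def run_agent(user_input: str, max_steps: int = 5) -> str:
    """Agent loop: call the LLM, execute requested tools, repeat until done."""
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = stub_llm(messages)
        messages.append(reply)
        if "tool" not in reply:  # no tool requested: this is the final answer
            return reply["content"]
        output = TOOLS[reply["tool"]](reply["args"])
        messages.append({"role": "tool", "content": output})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("hello agents"))  # Result: HELLO AGENTS
```

The `max_steps` bound is a design choice worth keeping in real agents too: a model that keeps requesting tools would otherwise loop forever.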

Examples

Minimal Example: Calculator Agent

The simplest possible agent—an LLM that can use a calculator tool:

| Example | Framework | Code |
|---|---|---|
| AgentsCalculator | LangChain + LangGraph | View |

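```python
from langchain_core.messages import HumanMessage
from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent


@tool
def calculate(expression: str) -> str:
    """Evaluate a mathematical expression."""
    # Emptying __builtins__ narrows what eval can reach, but it is NOT a sandbox:
    # only pass trusted input.
    return str(eval(expression, {"__builtins__": {}}, {}))


llm = ChatOpenAI(model="gpt-4o-mini")  # reads OPENAI_API_KEY from the environment
agent = create_react_agent(llm, [calculate])

result = agent.invoke({"messages": [HumanMessage(content="What is 347 * 892?")]})
print(result["messages"][-1].content)  # the agent's final answer
```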

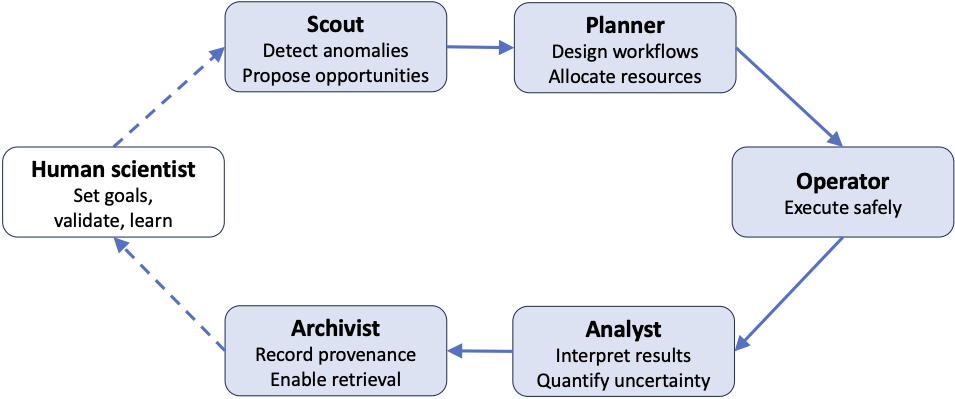
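The `eval` call in `calculate` stays risky even with `__builtins__` emptied, because attribute chains such as `().__class__` can still reach Python internals. One alternative, offered as an assumption rather than what the AgentsCalculator example does, is to parse the expression with the standard `ast` module and evaluate only whitelisted arithmetic nodes. `safe_eval` is a hypothetical name, and the exponent cap of 100 is an arbitrary illustrative limit.

```python
import ast
import operator

# Whitelisted operators: AST node type -> implementation.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.FloorDiv: operator.floordiv,
    ast.Mod: operator.mod,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def safe_eval(expression: str) -> float:
    """Evaluate a pure-arithmetic expression without calling eval()."""

    def _eval(node: ast.AST):
        # Numeric literals only (bool is excluded because type(True) is bool).
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            left, right = _eval(node.left), _eval(node.right)
            # Guard against huge powers such as 9**9**9 hanging the agent.
            if isinstance(node.op, ast.Pow) and abs(right) > 100:
                raise ValueError("exponent too large")
            return _BIN_OPS[type(node.op)](left, right)
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {ast.dump(node)}")

    return _eval(ast.parse(expression, mode="eval").body)


print(safe_eval("347 * 892"))  # 309524
```

Inside the tool, `return str(safe_eval(expression))` would replace the `eval` line; anything that is not plain arithmetic (calls, names, attributes) raises `ValueError`, which the agent can report back to the LLM.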

Five-Agent Scientific Discovery Pipeline

This more involved example demonstrates multi-agent coordination for scientific workflows. Five specialized agents work in sequence, each contributing domain expertise before passing results to the next:

| Agent | Role | Input | Output |
|---|---|---|---|
| Scout | Surveys problem space, detects anomalies | Goal | Research opportunities |
| Planner | Designs workflows, allocates resources | Opportunities | Workflow plan |
| Operator | Executes the planned workflow safely | Plan | Execution results |
| Analyst | Summarizes findings, quantifies uncertainty | Results | Analysis summary |
| Archivist | Documents everything for reproducibility | Summary | Documented provenance |
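Stripped of any framework, the hand-off in the table is function composition: each stage consumes the previous stage's output. A minimal sketch, with hypothetical stub agents whose return values are placeholders (the repository's implementations are also stubs, but not these ones):

```python
from typing import Callable


# Hypothetical stub agents; each consumes the previous stage's output.
def scout_agent(goal: str) -> list[str]:
    return [f"opportunity for {goal!r}"]


def planner_agent(opportunities: list[str]) -> dict:
    return {"steps": [f"investigate {o}" for o in opportunities]}


def operator_agent(plan: dict) -> list[str]:
    return [f"done: {step}" for step in plan["steps"]]


def analyst_agent(results: list[str]) -> dict:
    return {"n_results": len(results), "summary": "; ".join(results)}


def archivist_agent(analysis: dict) -> dict:
    return {"archived": True, "analysis": analysis}


PIPELINE: list[tuple[str, Callable]] = [
    ("Scout", scout_agent),
    ("Planner", planner_agent),
    ("Operator", operator_agent),
    ("Analyst", analyst_agent),
    ("Archivist", archivist_agent),
]


def run_pipeline(goal: str) -> tuple[dict, list[str]]:
    """Run the stages in order, feeding each output into the next stage."""
    data: object = goal
    trace = []  # names of the stages that ran, in order
    for name, agent in PIPELINE:
        data = agent(data)
        trace.append(name)
    return data, trace


record, trace = run_pipeline("catalyst screening")
print(trace)  # ['Scout', 'Planner', 'Operator', 'Analyst', 'Archivist']
```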

Implementations of the Five-Agent Workflow

We provide implementations of this example in LangGraph and Academy, demonstrating different orchestration patterns. Note that these implementations are toys: they create agents that communicate, but each agent’s internal logic is just a stub.

| Example | Framework | Pattern | Code |
|---|---|---|---|
| AgentsLangGraph | LangGraph | Graph-based orchestration | View |
| AgentsAcademy | Academy | True pipeline (agent-to-agent) | View |
| AgentsAcademyHubSpoke | Academy | Hub-and-spoke (main orchestrates) | View |

Pattern comparison:

- LangGraph: a StateGraph with typed state; edges define the execution order
- Academy Pipeline: agents forward results directly to each other via messaging
- Academy Hub-and-Spoke: the main process orchestrates all agents sequentially

No LLM is used in the Academy examples—agent logic is stubbed to focus on the messaging patterns.
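The difference between the two Academy patterns is who forwards each result. The sketch below illustrates both with plain `asyncio` queues standing in for agent mailboxes. It does not use Academy's API; the `work` stub and the string-appending "results" are hypothetical.

```python
import asyncio

STAGES = ["scout", "planner", "operator", "analyst", "archivist"]


def work(stage: str, data: str) -> str:
    """Hypothetical stub for a stage's internal logic."""
    return f"{data}->{stage}"


async def pipeline(goal: str) -> str:
    """True pipeline: each agent forwards its output to the next agent's mailbox."""
    mailboxes = [asyncio.Queue() for _ in range(len(STAGES) + 1)]

    async def agent(i: int) -> None:
        data = await mailboxes[i].get()
        await mailboxes[i + 1].put(work(STAGES[i], data))

    tasks = [asyncio.create_task(agent(i)) for i in range(len(STAGES))]
    await mailboxes[0].put(goal)        # main only injects the goal...
    result = await mailboxes[-1].get()  # ...and collects the final output
    await asyncio.gather(*tasks)
    return result


async def hub_and_spoke(goal: str) -> str:
    """Hub-and-spoke: main sends work to each agent and relays every result."""
    inboxes = {stage: asyncio.Queue() for stage in STAGES}
    replies: asyncio.Queue = asyncio.Queue()

    async def agent(stage: str) -> None:
        data = await inboxes[stage].get()
        await replies.put(work(stage, data))

    tasks = [asyncio.create_task(agent(stage)) for stage in STAGES]
    data = goal
    for stage in STAGES:                # main orchestrates every hop
        await inboxes[stage].put(data)  # main -> agent
        data = await replies.get()      # agent -> main
    await asyncio.gather(*tasks)
    return data


print(asyncio.run(pipeline("goal")))
print(asyncio.run(hub_and_spoke("goal")))
```

Both calls produce the same result; what differs is the traffic. In the pipeline, main touches only the first and last mailboxes, while in hub-and-spoke every intermediate result passes back through main, which is easier to monitor but makes main a relay for all data.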

Dashboard Version

The dashboard version wraps agents with a full-screen Rich UI showing live progress across multiple scientific goals.

| Example | Framework | Features | Code |
|---|---|---|---|
| AgentsAcademyDashboard | Academy | Rich dashboard, multi-goal | View |
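The core idea behind the dashboard (several goals moving through the pipeline concurrently while a display reads shared progress) can be sketched with `asyncio` alone. In this hypothetical sketch a plain-text `render` function stands in for the Rich UI; a Rich version might instead redraw a `rich.table.Table` inside a `rich.live.Live` context. The goal names and the `progress` dictionary are illustrative only.

```python
import asyncio

STAGES = ["Scout", "Planner", "Operator", "Analyst", "Archivist"]


async def run_goal(goal: str, progress: dict[str, str]) -> None:
    """Advance one goal through every stage, recording its current stage."""
    for stage in STAGES:
        progress[goal] = stage
        await asyncio.sleep(0.01)  # stub for the stage's real work
    progress[goal] = "done"


def render(progress: dict[str, str]) -> str:
    """Plain-text stand-in for a Rich table: one row per goal."""
    width = max(len(goal) for goal in progress)
    return "\n".join(f"{goal:<{width}}  {stage}" for goal, stage in progress.items())


async def dashboard(goals: list[str]) -> dict[str, str]:
    """Run all goals concurrently and periodically print a progress snapshot."""
    progress = {goal: "queued" for goal in goals}
    tasks = [asyncio.create_task(run_goal(g, progress)) for g in goals]
    while not all(t.done() for t in tasks):
        print(render(progress), end="\n\n")  # a live display would redraw in place
        await asyncio.sleep(0.02)
    await asyncio.gather(*tasks)
    print(render(progress))
    return progress


final = asyncio.run(dashboard(["catalysts", "electrolytes"]))
```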